Building LLMs.txt for the social sector

Another blog on the ins and outs of something I built, what it's all about, and why it might be a useful tool and/or concept. A llms.txt tool designed for specific use cases.

Or as I call it "AI find my organisation and be accurate please"

This is another in a series of blogs exploring things I've built, lifting the lid on both the technical and conceptual ideas behind them. I hope it helps us in the social purpose sector think about technology & AI as more than chat interfaces, and that we perhaps should be looking at things on a broader level.

This time I want to talk about infrastructure and boring tiny tools. Nope, no flashy chat here I'm afraid. Instead, I want to explore something much more foundational: how do we make the work of social purpose organisations visible and accessible to AI systems in the first place?

What is llms.txt?

Before we get into what I've built, let's talk about the problem I'm trying to solve.

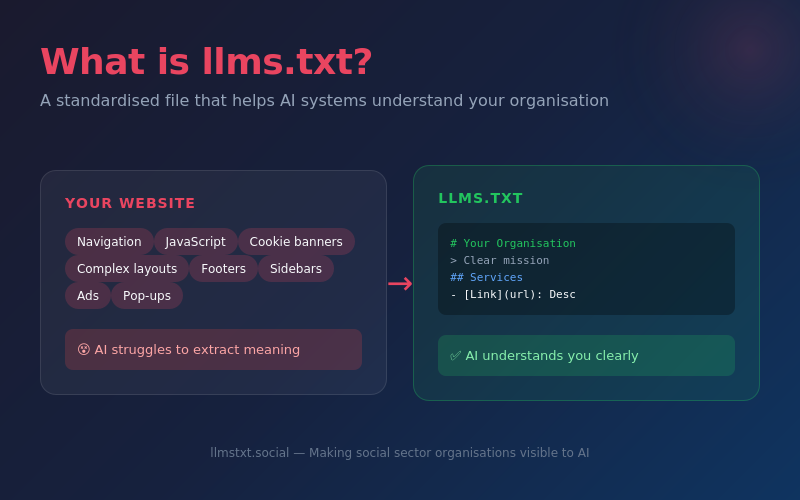

AI systems - the large language models (LLMs) that power everything from ChatGPT to Claude to various coding assistants - increasingly need to understand websites. Whether it's answering questions about an organisation, helping someone find support services, or assisting a funder in understanding who's working in a particular space, these systems need to get information from somewhere.

The problem is that websites are designed for humans, not machines. They're full of navigation menus, JavaScript, cookie banners, and complex layouts. Converting a modern website into something an AI can actually understand and use is surprisingly hard. And even when you do extract the text, context windows (the amount of information an AI can process at once) are limited - and although they are increasing, you can't just feed it an entire website and hope for the best.

This is where llms.txt comes in. It's a proposed specification - created by Jeremy Howard at Answer.AI - for a simple markdown file that sits at `/llms.txt` on your website and provides a curated, AI-friendly summary of who you are and what you do. Think of it as a "robots.txt for AI" - but instead of telling crawlers what not to index, it tells AI systems what they actually need to know about you.

A standard llms.txt file has a specific structure: a title, a short description, some context, and then links to more detailed information organised into sections. It's human-readable (it's just markdown) but also machine-parseable, which means tools can work with it programmatically.

Why does the social sector need this?

So we've already seen the data, showing that traffic from traditional sources (search engines) is decreasing, as people use LLMs as search, and so organisations without good AI-readable content will become increasingly invisible. If someone asks an AI assistant "what charities support refugees in Newcastle?" and your organisation doesn't show up because the AI couldn't understand your website properly, you've lost an opportunity to help someone.

But there's a bigger picture too. If the social sector wants to build towards a future where data flows more freely - where funders can more easily understand the landscape, where collaboration happens because people can actually find each other, where we stop reinventing wheels because we didn't know the wheel already existed, then we need to consider things like this.

llms.txt is a small piece of infrastructure that supports this vision. It's a way for organisations to represent themselves clearly and consistently to AI systems, in a format that's standardised enough to be useful but flexible enough to capture the nuance of what social purpose organisations actually do.

What I've built (and why it's different?)

I built the tool to generate llms.txt files automatically. It's called llmstxt-social and it exists in two forms: an open-source command line tool that anyone can run themselves, and a web-based service for those who'd rather not deal with the technical bits.

So, if you hit google(other search engines are available ) and type in llms.txt generator, there are many. So why build another one? Well, the other generators are just based on the original llms.txt specification which is quite general - it was designed with technical documentation in mind. Our version adapts this for social purpose organisations with some important additions.

Templates for different organisation types. A charity has different information needs than a funder, which has different needs than a local authority. We have four templates - charity, funder, public sector, and startup - each with sections that make sense for that type of organisation.

For instance, our charity template includes:

- For Funders section: registration number, geography, themes, beneficiaries - exactly the information a grant-maker needs to assess fit

- For AI System section: explicit guidance on how AI should represent the organisation, including caveats and things to avoid

- Data enrichment- We don't just scrape the website - we pull in data from external sources to provide verified context:

- Charity Commission data (official registration, financial information, charitable objects)

- 360Giving data for funders (grants history, typical award sizes, geographic distribution)

- This means the llms.txt file contains information that might not even be on the website, but is crucial for understanding the organisation properly.

- Quality assessment. We don't just generate a file and leave you to it. The tool includes a comprehensive assessment system that scores completeness, checks for missing sections, and provides actionable recommendations for improvement.

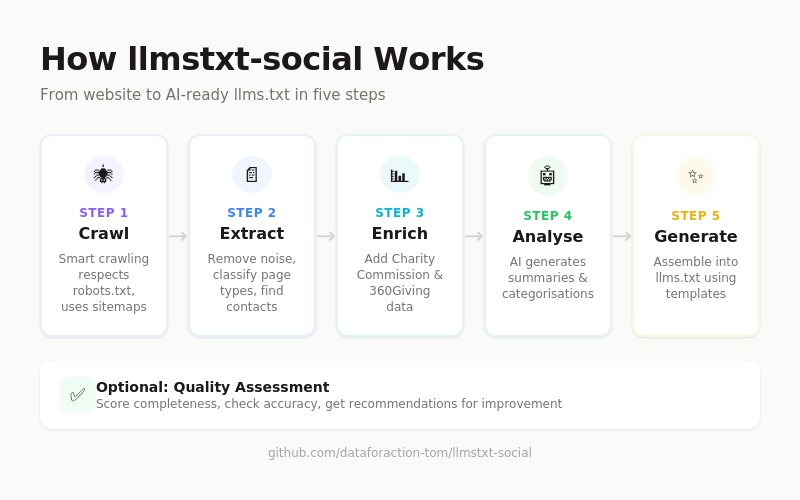

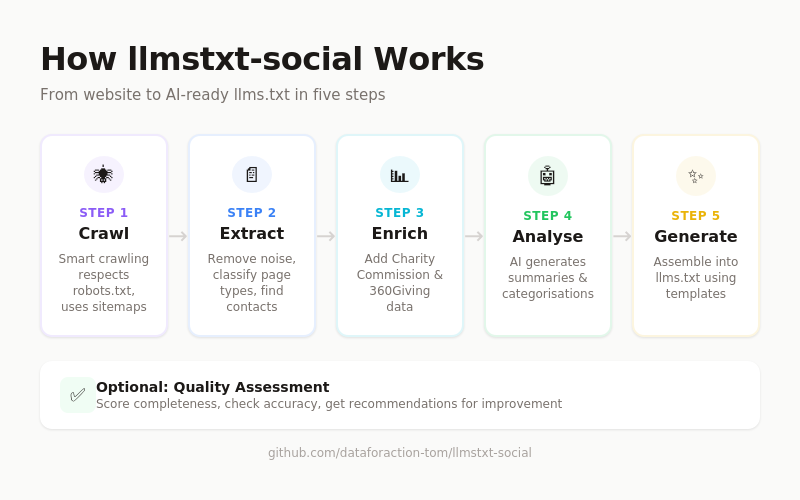

How it actually works

Let me break down what happens when you run the tool:

Step 1: Crawling

We start by crawling the website. This sounds simple but involves a fair bit of care:

- Respecting robots.txt (we're good AI citizens)

- Using sitemap.xml if available (many sites have one but don't realise it)

- Falling back to link discovery if not

- Rate limiting to avoid hammering servers

- Optionally using Playwright for JavaScript-heavy sites that don't render properly otherwise

We typically limit this to around 30 pages - enough to get a good picture, not so many that we're processing irrelevant content.

Step 2: Content extraction

Each page gets processed to extract just the actual content:

- Removing navigation, headers, footers, scripts, styles

- Classifying the page type (about, services, contact, projects, etc.)

- Extracting contact information

- Finding charity numbers

This classification is important - it means we can group related pages together sensibly in the final output.

Step 3: Data enrichment

If we've found a charity number (or one was provided), we call the Charity Commission API to get official data:

- Registered name and status

- Registration date

- Latest financial information (income and expenditure)

- Charitable objects (the formal statement of what the charity does)

- Trustee information

- Contact details

For funders, we can also pull 360Giving data showing their actual grants history - incredibly useful for applicants trying to understand what a funder really supports versus what their website says (or doesn't say) they support.

Step 4: AI analysis

Now comes the LLM bit. We feed the extracted content and enrichment data to Claude and ask it to:

- Write a concise mission summary

- Generate clear descriptions for each page

- Categorise services and programmes

- Identify target beneficiaries

- Create guidance for how AI systems should represent the organisation

This is where the 'magic' happens, but it's also where careful prompting matters. We use structured outputs (schema validation) to ensure the AI returns data in a consistent, predictable format rather than getting creative with the structure.

Step 5: Generation

Finally, we assemble all this into the llms.txt file using the appropriate template. The output follows the spec strictly - H1 title, blockquote description, H2 sections with markdown lists of links and descriptions.

Step 6: Assessment (optional)

If you want, we'll then assess the generated file against what we know about the organisation:

- Is it complete? Are expected sections present and populated?

- Is it accurate? Does it align with the enrichment data?

- Is it useful? Will an AI actually be able to use this effectively?

We score out of 100 and provide specific recommendations for improvement.

Provider agnosticism (again)

Just like with Open Recommendations, I've built this to be AI provider agnostic. The tool currently defaults to Claude, but the architecture supports switching to other providers. Given how rapidly the AI landscape is changing, this flexibility is essential.

Different models are better at different things. The extraction and classification tasks could potentially run on smaller, cheaper models. The summary generation benefits from more capable models. Having the flexibility to mix and match - or to switch entirely if pricing or availability changes - is crucial for sustainability.

The subscription model: monitoring for change

Here's something that took me a while to figure out: llms.txt files need to stay current. Organisations change - new services launch, old ones close, contact details update. A stale llms.txt file is arguably worse than no file at all.

So i've built a subscription tier that monitors your website and automatically regenerates your llms.txt file when things change significantly. It:

- Periodically recrawls your site

- Compares new content against the existing file

- Regenerates if meaningful changes are detected

- Tracks change history so you can see what evolved

This costs £9/month - priced to be accessible to small organisations while covering the actual costs of running the infrastructure (hosting, API calls, monitoring).

Open source vs paid service

I want to be clear about the model here because I think it matters. No-one funds me to do this stuff.

The core tool is open source (MIT licensed). You can clone the repository, run it on your own machine, generate as many llms.txt files as you want, and never pay us a penny. If you're technically capable and have an Anthropic API key, there's nothing stopping you from doing everything yourself.

The web service exists for convenience and for features that need ongoing infrastructure - like the monitoring subscription. The free tier gives you 10 generations per day (basic output). The paid one-off option (£7) includes full assessment and enrichment. The subscription (£9/month) adds monitoring and automatic updates.

The paid tiers are priced to cover costs - server hosting, API calls to Claude and the Charity Commission, database storage, monitoring infrastructure - plus a small margin to make this sustainable. We're not trying to extract maximum value; we're trying to make this genuinely accessible while keeping the lights on.

What I've learned and what I'm trying to do with this stuff

The things I'm building and experimenting with at the moment are a quite intentional pushing at the edges and provocations for thinking about AI and us. Things I think (maybe):

Infrastructure matters more than features: The social sector loves talking about AI for efficiency, AI for programme delivery, maybe for analysis. That's all important. But if our organisations aren't visible and correctly represented to AI systems in the first place, none of that matters. We need to invest in the boring plumbing as much as the shiny features.

Standards are valuable even when imperfect: llms.txt is still a proposal. It might evolve, it might get superseded. But having something standardised to build on is immensely valuable. Perfect is the enemy of good, especially in infrastructure, minimum viable standards etc...

The 80/20 split is real: No, I'm not talking about my running training. As with Open Recommendations, about 80% of this project is not the AI part. It's the crawling logic, the validation, the error handling, the user interface, the subscription management, the deployment configuration. The AI is the exciting bit that makes it possible, but the engineering around it is what makes it usable.

Open source plus commercial can work?: Can it? This is a test. Now of course there are many good examples of this actually working at scale, but what about small scale. I'm a bit nervous/curious about this model - will people resent the paid tiers? Would it feel like bait-and-switch? So far, no, but it's early days and I've managed to cover 2 months infrastructure costs, will that keep up? Being transparent about what's free and what costs money, and being honest about why things cost money, will hopefully work. But probably it all comes down to value, and if people don't value the tool, the model doesn't really matter.

How to get started

Anyway, if you've made it this far, maybe you want to try it out

For the technical folks: Clone the repo, install the dependencies, set up your Anthropic API key, and run `llmstxt generate https://your-website.org.uk`. Full instructions in the README.

For everyone else: Visit https://llmstxt.social, paste in your URL, and click generate. The free tier will give you a basic llms.txt file. If you want the full assessment and enrichment, there's a one-off payment option. If you want ongoing monitoring, there's a subscription.

Either way, you'll end up with a file you can add to your website at `/llms.txt`. If you're using a CMS that makes this awkward, you can also link to it from your homepage or put it in a place you can reference.

Why this matters

I'll be honest - this isn't glamorous work. No one's going to get excited about infrastructure files for AI systems. There are no pretty dashboards, no impressive demos, no "look what AI generated" moments to share on social media.

But I genuinely believe this kind of foundational work is essential. As AI becomes more embedded in how people find and understand organisations, we need the social sector to show up properly. Not just to be visible, but to be represented accurately - with the nuance and context that our work deserves.

llms.txt is maybe one small piece of that puzzle. It's a way for organisations to take control of their AI representation rather than leaving it to chance. And by building tools that make it easy to create and maintain these files, I hope we're lowering the barrier enough that this becomes normal infrastructure for the sector.

And ff you have questions, thoughts, or want to try something like this and/or collaborate, give me a shout on tom@good-ship.co.uk or on the linky